The first time I heard "serverless computing," I thought someone had finally cracked the code. We'd transcended physical hardware. Apps were just vibes and good intentions floating in the digital ether.

Then I learned the truth: there are absolutely servers involved. Lots of them. You just don't see them, don't manage them, and definitely don't cry over them at 3 AM when they crash.

Serverless is the tech industry's version of "sugar-free" candy — technically accurate, but misleading if you don't read the fine print.

Want a deep dive? Read AWS Lambda and serverless functions explained for technical details on how Lambda actually works.

What Serverless Actually Means

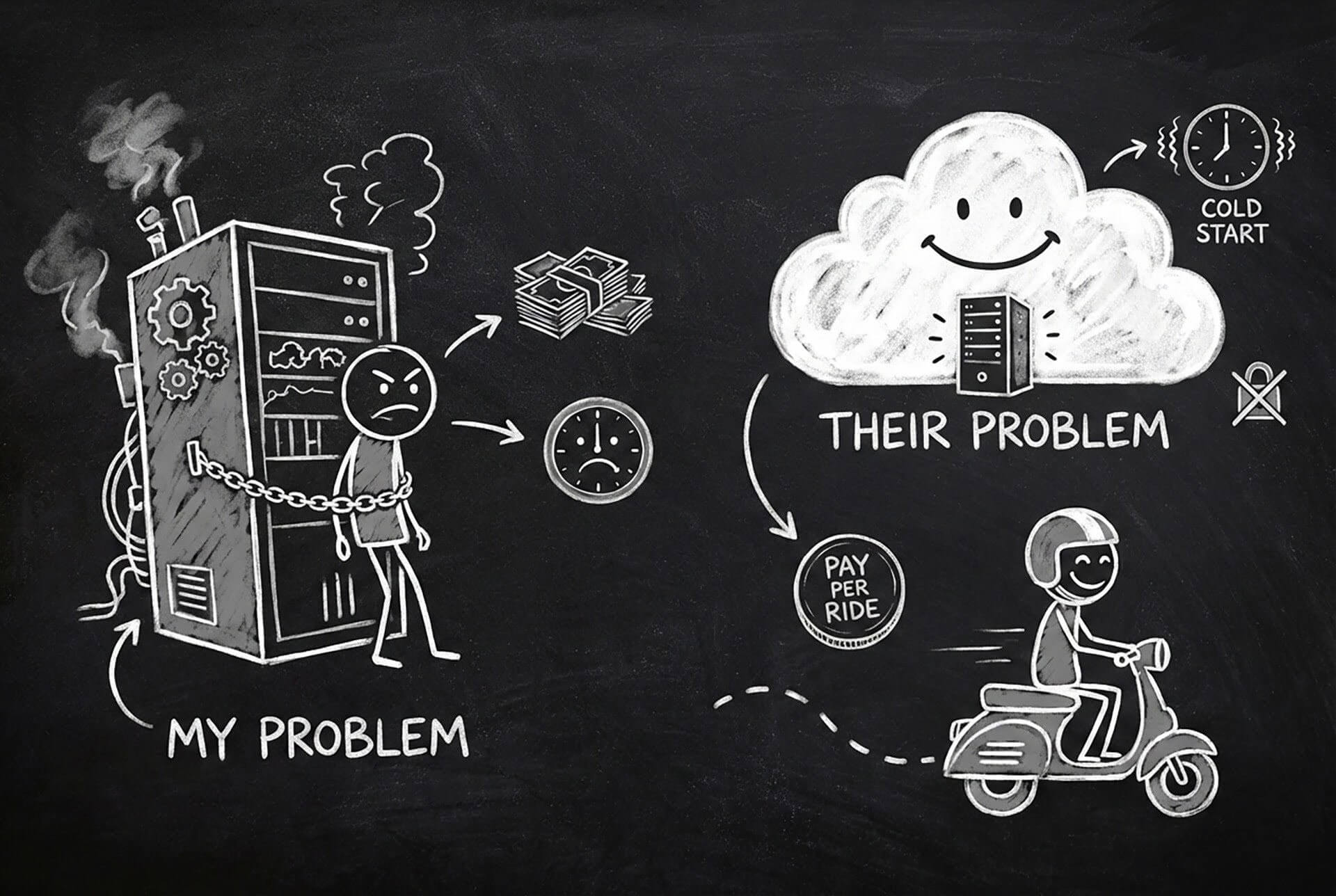

Serverless computing is a model where you write code, deploy it, and the cloud provider handles literally everything else. You don't provision servers, you don't configure operating systems, you don't even think about capacity planning.

You just write a function, upload it, and it runs when triggered. Like magic. Expensive, occasionally frustrating magic.
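
To make "a function" concrete, here is a minimal sketch, assuming AWS Lambda's Python runtime, where the platform calls an entry point with an `event` and a `context`. The `name` field in the event is a made-up input for illustration, not something any provider sends by default.

```python
def handler(event, context):
    """Entry point the platform calls each time the function is triggered."""
    # `event` carries the trigger's payload; "name" is a hypothetical field.
    name = event.get("name", "world")
    return {"message": f"Hello, {name}!"}


if __name__ == "__main__":
    # Local smoke test; in the cloud, the platform supplies event and context.
    print(handler({"name": "Nairobi"}, None))
```

That's the whole deployable unit: no web server, no port, no process manager.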

Think of it like this: remember when you had to download music, store it on your device, manage storage space, and organize playlists? Then Spotify came along and said, "Just stream it. We'll handle the rest."

Serverless is Spotify, but for code execution.

How It Works (The Boda Boda Analogy)

Normal server setup is like owning a boda boda. You buy it, maintain it, fuel it, and it sits in your compound even when you're not riding. The bike is YOURS. You're responsible for everything.

Serverless is like using those app-based boda services. You don't own the bike. You don't maintain it. You just request a ride when you need it, pay for that specific trip, and forget about it until next time.

The boda still exists. It's just not your problem.

When you deploy a serverless function:

- You write the code (like setting your destination)

- You upload it to a cloud provider (like requesting the boda)

- The function sits dormant, costing you nothing (the boda waits at the stage)

- Something triggers it — a user action, a scheduled job, an event (you climb on)

- The function runs, does its thing, and stops (you arrive and the boda leaves)

- You only pay for the execution time (you only pay for the ride you took)

No servers to maintain. No idle costs when nothing's running. You just pay for actual usage.
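
The steps above can be sketched with a toy stand-in for the platform. This is purely illustrative: `ToyPlatform`, `deploy`, and `trigger` are hypothetical names, and no real provider works this simply. The point is only that the meter runs while the function does, and at no other time.

```python
import time


class ToyPlatform:
    """A toy stand-in for a serverless platform; not how any real provider works."""

    def __init__(self):
        self.functions = {}   # deployed code, dormant until triggered
        self.billed_ms = 0.0  # only grows while a function is running

    def deploy(self, name, fn):
        # Uploading code costs nothing in this toy model.
        self.functions[name] = fn

    def trigger(self, name, event):
        start = time.perf_counter()
        try:
            return self.functions[name](event)
        finally:
            # Meter only the time spent executing.
            self.billed_ms += (time.perf_counter() - start) * 1000


platform = ToyPlatform()
platform.deploy("greet", lambda event: f"Hello, {event['rider']}")
print(platform.billed_ms)  # still idle, so nothing has been metered yet
print(platform.trigger("greet", {"rider": "Amina"}))
print(platform.billed_ms)  # now reflects one short execution
```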

Why Developers Are Losing Their Minds Over This

1. Zero Infrastructure Management

Remember when deploying an app meant:

- Setting up a server

- Installing dependencies

- Configuring firewalls

- Setting up load balancers

- Praying nothing breaks

With serverless? You write code. You deploy. Done. It's like the difference between building a house from scratch vs. just renting a furnished Airbnb.

2. Automatic Scaling

Your app goes viral overnight? With traditional servers, you'd be scrambling to add capacity before everything crashes.

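With serverless? The platform automatically spins up as many instances as needed, up to your account's concurrency limit. One user? One function runs. One million users hitting you at the same moment? The platform tries to run a function for every in-flight request. No panic. No manual intervention.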

It's like matatus magically multiplying when there's a crowd, then disappearing when the stage clears. Although we all know the opposite is true for matatus.
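
For a rough feel of how many instances "as many as needed" really is, a back-of-the-envelope sketch helps. It uses Little's law (in-flight requests = arrival rate × average duration). The default limit of 1,000 is an assumption modelled on AWS Lambda's default regional concurrency quota; your account's number may differ.

```python
import math


def required_concurrency(requests_per_second: float, avg_duration_s: float) -> int:
    """Estimate how many function instances run at once (Little's law)."""
    return math.ceil(requests_per_second * avg_duration_s)


def would_throttle(requests_per_second: float, avg_duration_s: float,
                   concurrency_limit: int = 1000) -> bool:
    """True if the estimated concurrency exceeds the (assumed) account limit."""
    return required_concurrency(requests_per_second, avg_duration_s) > concurrency_limit


# 200 requests/second at 0.5 s each needs about 100 instances at a time.
print(required_concurrency(200, 0.5))
# 10,000 requests/second at 0.25 s each needs about 2,500: over the assumed limit.
print(would_throttle(10_000, 0.25))
```

So a million users rarely means a million simultaneous functions. What matters is how many requests are in flight at the same instant.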

3. Pay-Per-Execution

This is the real game-changer. You're not paying for a server that sits idle 90% of the time. You pay per request and per millisecond of compute time, scaled by how much memory you give the function.

For side projects and startups, this is beautiful. Your hobby project that gets 50 requests a month? That'll cost you less than a soda. Your app could sit unused for months and cost you basically nothing.
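
Here's a rough sketch of that arithmetic. The two rates are assumptions, set close to AWS Lambda's published on-demand prices at the time of writing; check your provider's pricing page before trusting them. The sketch also ignores the free tier, data transfer, and any API gateway in front of the function.

```python
# Assumed rates in USD; real prices vary by provider, region, and architecture.
PRICE_PER_MILLION_REQUESTS = 0.20
PRICE_PER_GB_SECOND = 0.0000166667


def monthly_cost(requests: int, avg_duration_ms: float, memory_mb: int) -> float:
    """Estimate a month's bill from request count, duration, and memory size."""
    request_cost = requests / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    # Compute is billed in GB-seconds: duration times allocated memory.
    gb_seconds = requests * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return request_cost + gb_seconds * PRICE_PER_GB_SECOND


# The hobby project: 50 requests a month, 200 ms each, 128 MB of memory.
print(f"${monthly_cost(50, 200, 128):.6f}")
```

Under those assumptions the hobby project comes out at a tiny fraction of a cent.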

4. Focus on Code, Not Infrastructure

Developers can spend their time building features instead of babysitting servers. No more "it works on my machine" debates. No more server maintenance windows. Just code.

The Catch (Because There's ALWAYS a Catch)

Serverless sounds too good to be true because, well, parts of it are.

1. Cold Starts (AKA The Annoying Wait)

When a function hasn't run in a while, or when a traffic spike forces the platform to start fresh instances, the platform needs to wake it up. This takes time: sometimes a few hundred milliseconds, sometimes several seconds.

It's like calling that friend who always says "I'm 5 minutes away" when they haven't even left the house yet.

For some apps, this delay is fine. For others (like real-time applications), it's a deal-breaker.
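
One common way to soften cold starts is to do expensive setup once, outside the handler, so warm invocations reuse it. The sketch below assumes a Lambda-style runtime where module-level code runs once per execution environment. `_load_config` and its 50 ms sleep are hypothetical stand-ins for real setup work such as opening database connections.

```python
import time

_invocations = 0  # survives between calls while the environment stays warm


def _load_config():
    """Hypothetical expensive setup (stand-in for DB connections, SDK clients)."""
    time.sleep(0.05)
    return {"greeting": "Hello"}


# Module-level code: paid once per cold start, then reused by warm invocations.
CONFIG = _load_config()


def handler(event, context):
    global _invocations
    _invocations += 1
    return {
        "cold_start": _invocations == 1,  # only the first call in this environment
        "greeting": CONFIG["greeting"],
    }
```

This doesn't remove the first-call delay. It just stops every later call from paying it again.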

2. Vendor Lock-In (Again)

Each cloud provider has its own serverless implementation:

- AWS Lambda

- Google Cloud Functions

- Azure Functions

They're similar but not identical. Code written for one doesn't easily port to another. You're locked in tighter than a Nairobi parking spot during rush hour.
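
A common way to loosen the lock-in is to keep the business logic free of provider details and wrap it in thin adapters. The sketch below makes two assumptions: an AWS Lambda function behind an API Gateway proxy integration, and a Google Cloud Functions HTTP trigger that passes a Flask-style request. `build_greeting` is a hypothetical piece of business logic.

```python
import json


def build_greeting(name: str) -> dict:
    """Provider-agnostic business logic: no cloud SDKs, no event shapes."""
    return {"message": f"Hello, {name}!"}


def lambda_handler(event, context):
    """Adapter assuming AWS Lambda behind an API Gateway proxy integration."""
    # The query parameters may be None when the request has none.
    params = event.get("queryStringParameters") or {}
    body = build_greeting(params.get("name", "world"))
    return {"statusCode": 200, "body": json.dumps(body)}


def gcf_handler(request):
    """Adapter assuming a Google Cloud Functions HTTP trigger (Flask-style request)."""
    body = build_greeting(request.args.get("name", "world"))
    return json.dumps(body), 200, {"Content-Type": "application/json"}
```

Switching providers still means rewriting the adapters, the deployment config, and anything that touches provider-specific services. The core logic, at least, comes along unchanged.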

3. Debugging Is a Nightmare

When something goes wrong on a traditional server, you can SSH in and poke around. With serverless? Good luck. You're staring at logs hoping they tell you something useful.

It's like trying to fix a car when you're not allowed to open the hood.
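
Since logs are all you get, it pays to make them searchable. Here's a minimal sketch of structured logging, assuming a Lambda-style `context` that exposes `aws_request_id`. The `order_id` and `items` fields are hypothetical inputs invented for the example.

```python
import json
import logging

# For local runs; does nothing if the runtime already configured a log handler.
logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("orders")
logger.setLevel(logging.INFO)


def log_event(level, message, **fields):
    """Emit one JSON object per line so logs can be filtered by field."""
    logger.log(level, json.dumps({"message": message, **fields}, default=str))


def handler(event, context):
    # Fall back to "local" when running without a real platform context.
    request_id = getattr(context, "aws_request_id", "local")
    log_event(logging.INFO, "invocation started",
              request_id=request_id, order_id=event.get("order_id"))
    try:
        total = sum(item["price"] * item["qty"] for item in event["items"])
    except (KeyError, TypeError) as exc:
        # Log the input that caused the failure, then let the error surface.
        log_event(logging.ERROR, "bad input",
                  request_id=request_id, error=repr(exc), event=event)
        raise
    log_event(logging.INFO, "invocation finished", request_id=request_id, total=total)
    return {"total": total}
```

Tagging every line with the request ID lets you pull one invocation's story out of thousands of interleaved log lines.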

4. Cost Can Spiral

For low-traffic apps, serverless is cheap. For high-traffic apps, costs can EXPLODE. You're paying per request, and requests add up fast.

It's like M-Pesa transaction fees. One transaction? Whatever. Ten thousand transactions? Suddenly you're funding Safaricom's quarterly profits.
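
A quick break-even sketch shows where the tipping point sits. Both inputs are hypothetical: a blended serverless cost per million requests (requests plus compute plus whatever sits in front) and a flat monthly price for a server that could handle the same load.

```python
def serverless_monthly_cost(requests: int, cost_per_million: float) -> float:
    """Serverless bill: grows linearly with traffic."""
    return requests / 1_000_000 * cost_per_million


def break_even_requests(server_monthly_cost: float, cost_per_million: float) -> float:
    """Monthly request count at which a flat-price server becomes cheaper."""
    return server_monthly_cost / cost_per_million * 1_000_000


# Hypothetical numbers: $4 per million requests vs. a $40/month server.
print(break_even_requests(40, 4))
print(serverless_monthly_cost(50_000_000, 4))
```

With those made-up numbers, the server wins once you pass ten million requests a month. At fifty million, the serverless bill is five times the server's.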

Learn cloud cost optimization strategies to avoid serverless bill shock. Monitoring and cost controls are essential.

5. Execution Time Limits

Most serverless functions have time limits, usually between 5 and 15 minutes (AWS Lambda, for example, caps a single invocation at 15 minutes). If your code takes longer, too bad. Function gets killed.

You can't easily run long jobs or background tasks. It's designed for quick, event-driven operations.
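
One workaround is to process work in chunks and stop before the clock runs out. The sketch below assumes a Lambda-style `context` with `get_remaining_time_in_millis()`. `process`, the `cursor` field, and the 10-second safety margin are hypothetical choices for illustration, and something else (a queue, a workflow service) has to re-invoke the function with the returned cursor.

```python
SAFETY_MARGIN_MS = 10_000  # assumed buffer; stop well before the platform kills us


def process(item):
    """Hypothetical unit of work; replace with the real task."""
    return item * 2


def handler(event, context):
    items = event["items"]
    cursor = event.get("cursor", 0)  # where a previous invocation left off
    while cursor < len(items):
        if context.get_remaining_time_in_millis() < SAFETY_MARGIN_MS:
            # Out of time: report progress so the caller can re-invoke.
            return {"done": False, "cursor": cursor}
        process(items[cursor])
        cursor += 1
    return {"done": True, "cursor": cursor}
```

It works, but if you find yourself building this for every job, that's a hint the workload belongs somewhere else.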

When to Use Serverless (And When to Run Away)

Good Use Cases:

- APIs and microservices: Handle requests, return responses, done

- Scheduled jobs: Run a task every hour/day/week

- Event-driven processing: User uploads a photo, trigger a function to resize it

- Chatbots and webhooks: Respond to external events

- Prototypes and MVPs: Build fast without infrastructure overhead
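
To show what the photo-upload case looks like in code, here's a sketch that assumes the function is triggered by an Amazon S3 upload notification. `resize_image` is a hypothetical placeholder. A real version would download the object, resize it with an imaging library, and write the result back.

```python
from urllib.parse import unquote_plus


def resize_image(bucket: str, key: str) -> str:
    """Hypothetical placeholder: pretend to resize and return the output path."""
    return f"{bucket}/thumbnails/{key}"


def handler(event, context):
    resized = []
    # One notification can carry several records, one per uploaded object.
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded (spaces become "+"), so decode them.
        key = unquote_plus(record["s3"]["object"]["key"])
        resized.append(resize_image(bucket, key))
    return {"resized": resized}
```

Nobody polls for new files and nothing runs between uploads. The upload itself is the trigger.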

See a real example: Building serverless APIs with Lambda and Node.js walks through creating production-ready REST APIs using serverless architecture.

Bad Use Cases:

- Long-running computations: Anything taking more than a few minutes

- Latency-critical apps: When every millisecond counts, an occasional cold start will ruin you

- Predictable, consistent traffic: If your app is always busy, a traditional server is probably cheaper

- Applications requiring persistent connections: WebSockets, gaming servers, etc.

The Real Talk: Is Serverless the Future?

Kind of, but not entirely.

Serverless is perfect for certain workloads. It's revolutionizing how we build event-driven architectures. It's making it possible for solo developers to build and scale apps that would've required a whole team a decade ago.

But it's not replacing traditional servers. It's complementing them.

Many companies use a mix: serverless for spiky, unpredictable workloads, and traditional servers for steady, predictable ones. Hybrid approach. Best of both worlds.

Compare serverless with container options in EC2 vs ECS vs Fargate to choose the right compute model for your workload.

For orchestrated workloads with complex requirements, Kubernetes offers more control than serverless but requires more management overhead.

Getting Started (If You're Curious)

If you want to try serverless without commitment:

- AWS Lambda: Free tier gives you 1 million requests per month, plus 400,000 GB-seconds of compute. Perfect for experimenting.

- Vercel/Netlify Functions: Deploy serverless functions with your frontend. Dead simple.

- Google Cloud Functions: Similar to Lambda, slightly different pricing.

Write a simple function (like a URL shortener or a random quote generator), deploy it, and see how it feels. You'll either love the simplicity or hate the limitations. Only one way to find out.
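
If you want a starting point, a random quote generator fits in a dozen lines. This sketch assumes AWS Lambda behind an API Gateway proxy integration, which expects a `statusCode` and a string `body`. The quotes are lifted from this post, so swap in your own.

```python
import json
import random

# Placeholder quotes borrowed from this article; replace with your own.
QUOTES = [
    "The boda still exists. It's just not your problem.",
    "Serverless is Spotify, but for code execution.",
    "Use it for the right job, not every job.",
]


def handler(event, context):
    """Return one random quote as a JSON HTTP response."""
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"quote": random.choice(QUOTES)}),
    }


if __name__ == "__main__":
    # Try it locally before deploying.
    print(handler({}, None)["body"])
```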

The Bottom Line

Serverless computing isn't about eliminating servers. It's about eliminating YOUR responsibility for managing them. Someone still has to run those machines — it's just not you.

For the right use case, it's liberating. For the wrong use case, it's expensive and frustrating.

Know the difference. Choose accordingly.

Takeaway: Serverless lets you run code without managing servers. You write functions, deploy them, and only pay when they execute. Perfect for event-driven workloads and prototypes, but comes with trade-offs like cold starts, vendor lock-in, and potential cost explosions. Use it for the right job, not every job.